Vasudevan Mukunth is the science editor at The Wire.

The Columbia space shuttle lifts off on its STS-107 mission on January 16, 2003. Photo: mvannorden/Flickr, CC BY 2.0

- In 2016, a US company published a long, complicated report about the risk that a lab leak could cause a pandemic.

- It didn’t have the intended effect on the US government, which subsequently lifted its moratorium on funding gain-of-function research.

- In 2003, the Columbia space shuttle disaster, which killed seven astronauts including Kalpana Chawla, was also partly rooted in poor risk communication.

- Both these incidents teach us that thorough communication is different in critical ways from effective communication.

In 2016, a company called Gryphon Scientific published a report that had been commissioned by the US government. The report said that virus research facilities posed a small but non-zero risk of causing a pandemic. It estimated that an accidental infection from a US-based influenza virus or coronavirus laboratory could happen once every 3 to 8.5 years, and that the chance of such an infection leading to a global pandemic was 0.4%.

More importantly, however, the report asked whether any estimate like its own could be trustworthy. Such risk analysis is complicated and subjective: it attempts to reduce intricate problems like human error and system failure to one or a few numbers, which is always a reductive exercise. Rocco Casagrande, one of the report’s authors and a cofounder of Gryphon Scientific, later said that his team’s desire to show all its work had undermined it, in that the report was just too complex:

“… I think a lot of people would have maybe hung on to that a bit more. But unfortunately, what we did is we wrote the Bible, right? And so you could basically just take any allegory you want out of it to make your case.”

That is, the report presented so much information that people who had already made up their minds, in opposing directions, could each find something in it to support their own views.

Baruch Fischhoff, a risk analysis expert at Carnegie Mellon University, also said (ibid.) that the report was long and complex. “It looked authoritative. But there was no sense of just how much – you know, what you should do with those numbers, and like most risk analyses, it’s essentially unauditable.”

In the end, the report apparently failed to influence how the US government planned and funded virus research facilities and projects. The following year, the US National Institutes of Health (NIH) lifted the moratorium on funding gain-of-function research that it had imposed in 2014, while retaining most of the same conditions. As a result, one scientist called the report and other risk-assessment efforts like it a “fig leaf” (ibid.).

Communicating risk

Communicating risk is not as easy as it may sound, as Shruti Muralidhar and Siddharth Kankaria have written. It requires special care and a sensitive use of language that conveys the magnitude of a problem, together with the specific conditions under which the risk was assessed, without pressing the alarm button.

At the same time, the communication needs to be clear. The Gryphon Scientific report was long and involved, which kept its readers from absorbing a few important takeaways and from using a few key numbers to decide what to do next. Real problems rarely reduce to just a few takeaways and numbers. But if we don’t expect a report’s readers to understand the ins and outs of risk analysis, we must find a way to distill what we’re trying to say into small chunks that can quickly and effectively inform important decisions.

The Gryphon Scientific report didn’t change the way the NIH did things, and we still need to think about whether that contributed in any way to the COVID-19 pandemic. But there is an older example from history in which a group of experts didn’t do a good enough job of communicating risk, and thus contributed to a terrible disaster that killed seven people.

STS-107

On January 16, 2003, NASA launched the Columbia space shuttle into low-Earth orbit with a crew of seven. Eighty-two seconds after launch, a piece of foam broke off from a part attaching the fuel tank to the shuttle and struck the shuttle’s left wing. NASA engineers were concerned that the collision might have damaged the portion of the wing that protects the shuttle from the heat generated when it reenters Earth’s atmosphere. So, while the shuttle and its crew carried on with their science mission in orbit, NASA asked engineers at Boeing to assess the threat.

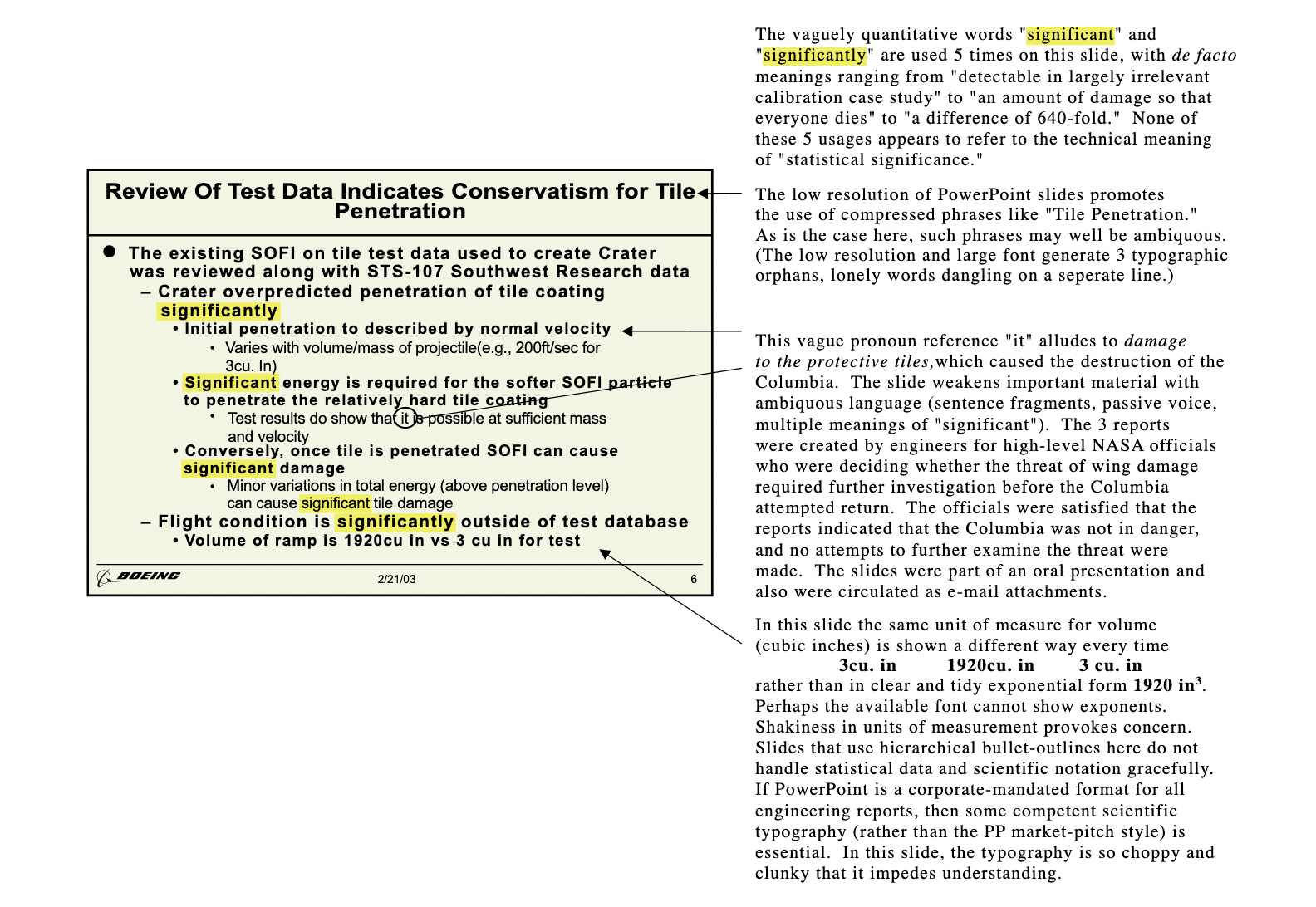

The Boeing team prepared a PowerPoint presentation to communicate what its members had found; the slide that presented the risk that faced NASA if it allowed the Columbia shuttle to reenter Earth’s atmosphere looked like this.

At the end of the Boeing presentation, the NASA team concluded that Columbia could be allowed to reenter without endangering its crew. On the designated date, February 1, Columbia began its descent. But less than half an hour before the shuttle was scheduled to land, mission control stopped receiving readings from sensors on the shuttle, and then lost contact with the crew. Just four minutes before its scheduled landing, reports started to come in from people in and around Dallas that a large object could be seen disintegrating in the sky. All seven crew members, including Kalpana Chawla, were killed.

The Columbia Accident Investigation Board released its final report in August 2003. Its verdict:

The physical cause of the loss of Columbia and its crew was a breach in the Thermal Protection System on the leading edge of the left wing, caused by a piece of insulating foam which separated from the left bipod ramp section of the External Tank at 81.7 seconds after launch, and struck the wing in the vicinity of the lower half of Reinforced Carbon. … During re-entry this breach in the Thermal Protection System allowed superheated air to penetrate through the leading edge insulation and progressively melt the aluminum structure of the left wing, resulting in a weakening of the structure until increasing aerodynamic forces caused loss of control, failure of the wing, and break-up of the Orbiter.

Tufte’s remarks

Among the additional investigators NASA had brought in was the statistician and data-visualisation expert Edward Tufte, who zeroed in, among other things, on the PowerPoint slides the Boeing engineers had presented to NASA.

According to Tufte, the engineers had used the same words to mean different things in different sentences, font sizes out of proportion to the relative importance of the text, and hierarchical bullet points to make non-hierarchical points. “If PowerPoint is a corporate-mandated format for all engineering reports, then some competent scientific typography (rather than the PowerPoint market-pitch style) is essential,” Tufte concluded. “In this slide, the typography is so choppy and clunky that it impedes understanding.” The board also wrote in its report:

As information gets passed up an organization hierarchy, from people who do analysis to mid-level managers to high-level leadership, key explanations and supporting information is filtered out. In this context, it is easy to understand how a senior manager might read this PowerPoint slide and not realize that it addresses a life-threatening situation. At many points during its investigation, the Board was surprised to receive similar presentation slides from NASA officials in place of technical reports. The Board views the endemic use of PowerPoint briefing slides instead of technical papers as an illustration of the problematic methods of technical communication at NASA.

Both the lab-leak risk assessment and the Columbia disaster hold two important lessons for science communicators, including scientists. First, science communication is neither science education nor the writing of research papers. Being thorough in our articles, videos and podcasts is not the same as communicating effectively; in fact, too much thoroughness can have the opposite effect. Second, the tools, grammar and symbols of information design, a field to which Tufte has contributed, exist so that we can translate into words what we have thus far only sensed. We must use them in a rational, non-arbitrary way.

Overall, it is important that we assimilate the information before us, with the help of experts and research articles, and then decide how best we can present what we have learnt to our audience, so that the takeaways are clear. And yes, we must make judgment calls about which takeaways are more important than others.